The first thing I did was set up OpenOCD to handle the output from the processor. I have included my configuration file for OpenOCD below:

    ## This is the Test Rom Board
    ## Core429I Board
    ## STM32F429IGT6 ARM Cortex-M4

    ## Use the STLINK V2 Debug Probe
    source [find interface/stlink-v2.cfg]

    ## This is setup with Serial Wire Debug
    set WORKAREASIZE 0x20000
    transport select hla_swd

    ## Use Generic STM32F4x chip setup support
    source [find target/stm32f4x.cfg]

    ## Reset (software only reset)
    reset_config srst_only srst_nogate

    ## Boost Adapter speed to 4 MHz
    adapter_khz 4000

    ## Configure SWO/SWV
    ## System Core Clock 180 MHz
    ## Baud Rate 2 Mbaud/s
    tpiu config internal debug.log uart off 180000000 2000000

The important part of this configuration file is the last section, marked by the Configure SWO/SWV comment. The OpenOCD documentation for tpiu config explains the options very well. In my case, internal debug.log tells OpenOCD to capture the trace through the debug adapter and write the ITM output to debug.log, uart selects the asynchronous (UART-style) SWO encoding, and off disables the TPIU formatter. After that you need to give the SysCoreClock frequency in hertz, followed by the baud rate.
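To see how the last two numbers relate, here is a small sketch of the divider arithmetic. As far as I understand it, in asynchronous mode the SWO pin runs at the trace clock divided by (prescaler + 1), and OpenOCD works this out for you from the two values on the tpiu config line. The function names below are my own, not part of OpenOCD or CMSIS, and I am assuming the trace clock equals the 180 MHz core clock as in my configuration.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical helper: prescaler value for asynchronous SWO, assuming
 * baud = trace_clk_hz / (prescaler + 1). Integer division truncates. */
uint32_t swo_prescaler(uint32_t trace_clk_hz, uint32_t baud)
{
    assert(baud > 0u && baud <= trace_clk_hz);
    return trace_clk_hz / baud - 1u;
}

/* Baud rate actually produced by a given integer prescaler. */
uint32_t swo_actual_baud(uint32_t trace_clk_hz, uint32_t prescaler)
{
    return trace_clk_hz / (prescaler + 1u);
}
```

With 180000000 and 2000000 the division is exact, so the requested and actual baud rates match. A baud rate that does not divide the clock evenly will come out slightly off, which is worth checking if the output looks garbled.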

Next, you need to implement at least the _write system call, which is where the C library's output (printf and friends) ends up. I have included my implementation below:

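    #include <unistd.h>      /* STDOUT_FILENO, STDERR_FILENO */
    #include "stm32f4xx.h"   /* CMSIS device header that provides ITM_SendChar;
                                the exact header depends on your project */

    int _write(int file, char *ptr, int len)
    {
        REQUIRE(file);
        REQUIRE(ptr);
        ENSURE(len >= 0);

        int DataIdx;

        switch(file)
        {
        /* stdout and stderr both go out over ITM stimulus port 0 */
        case STDOUT_FILENO:
        case STDERR_FILENO:
            for (DataIdx = 0; DataIdx < len; DataIdx++)
            {
                ITM_SendChar(*ptr++ & (uint32_t)0x01FF);
            }
            break;
        /* any other descriptor is silently discarded */
        default:
            break;
        }

        /* report all bytes as written so the C library does not retry */
        return len;
    }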
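If you want to check the routing logic without a board attached, something like the following can be built on a PC. This is only a sketch: ITM_SendChar here is a stand-in I wrote that records bytes in a buffer instead of touching the ITM hardware, and itm_write is a hypothetical name for a copy of the routing in _write above, renamed so it does not clash with the host C library. The REQUIRE/ENSURE macros are replaced with plain assert.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>
#include <unistd.h>   /* STDOUT_FILENO, STDERR_FILENO */

/* Host-only stand-in for the CMSIS ITM_SendChar(): stores each byte
 * in a buffer so the output can be inspected afterwards. */
char   captured[64];
size_t captured_len;

uint32_t ITM_SendChar(uint32_t ch)
{
    if (captured_len < sizeof captured - 1) {
        captured[captured_len++] = (char)ch;
        captured[captured_len] = '\0';
    }
    return ch;
}

/* Hypothetical copy of the _write routing: stdout and stderr are
 * forwarded byte by byte, everything else is dropped. */
int itm_write(int file, const char *ptr, int len)
{
    assert(ptr != NULL);
    assert(len >= 0);

    if (file == STDOUT_FILENO || file == STDERR_FILENO) {
        for (int i = 0; i < len; i++) {
            ITM_SendChar((uint32_t)(uint8_t)ptr[i]);
        }
    }
    return len;   /* always report the full length as written */
}
```

Calling itm_write(STDOUT_FILENO, "Hello World!\n", 13) should leave the same text in captured, while a call with any other descriptor should leave the buffer untouched and still return the length.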

If you are curious about the REQUIRE and ENSURE macros, you can find out about them here. The linker options need to include the following so that you link against the nano C library (newlib-nano):

    -lm --specs=nano.specs --specs=nosys.specs

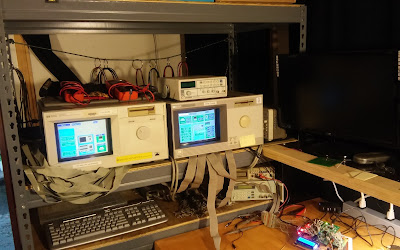

To view the SWO/SWV output you need a viewer for the file; I am using swo-tracer, written by Andrey Yurovsky. I tested the whole setup and received a Hello World! from my embedded code, as shown below.
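The reason a viewer is needed is that debug.log holds raw ITM packets rather than plain text. As a rough illustration of what a viewer has to do (this is my own sketch, not code from swo-tracer), the function below pulls the port 0 characters out of a buffer of raw bytes. It assumes the software instrumentation packet layout I know of: a header byte with the port number in bits 7..3, bit 2 clear, and a size code in bits 1..0 (1, 2 or 3 meaning 1, 2 or 4 payload bytes). It also assumes timestamps are disabled, so headers with size code 0 are simply skipped. The name itm_extract_port0 is hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Copy the payload of every software packet on stimulus port 0 into out.
 * Returns the number of characters written (at most out_cap). */
size_t itm_extract_port0(const uint8_t *in, size_t in_len,
                         char *out, size_t out_cap)
{
    assert(in != NULL && out != NULL);

    size_t n = 0;
    size_t i = 0;
    while (i < in_len) {
        uint8_t hdr = in[i++];
        uint8_t size_code = hdr & 0x03u;

        if (size_code == 0u) {
            continue;   /* sync/overflow/other protocol bytes: ignored here */
        }

        size_t payload = (size_code == 3u) ? 4u : (size_t)size_code;
        if (i + payload > in_len) {
            break;      /* truncated packet at the end of the buffer */
        }

        int is_software = (hdr & 0x04u) == 0u;
        unsigned port = (unsigned)hdr >> 3;
        if (is_software && port == 0u) {
            for (size_t k = 0; k < payload && n < out_cap; k++) {
                out[n++] = (char)in[i + k];
            }
        }
        i += payload;
    }
    return n;
}
```

Since ITM_SendChar writes one byte at a time to port 0, the stream from the _write implementation should mostly be pairs of 0x01 followed by a character. A real viewer also has to cope with packets split across reads and with timestamp packets, which this sketch does not attempt.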